In this How-To series, I want to share my experience with machine learning models in production environments. It starts with the general differences to typical software projects and how to acquire and handle data sets in such projects, continues with managing pre-processing, training, persisting data pipelines and managing models, and ends with the monitoring of ML systems.

This How-To has three parts:

- Data Acquisition: General differences to usual software engineering

- Consistent Preprocessing: Understanding why preprocessing and models should be bundled

- Monitoring: How and what metrics to monitor

Difference to non-data-driven projects

Machine learning projects, whether they use deep or shallow learning approaches, are somewhat different from typical software engineering projects. Typical modern software projects tend to be as agile as possible. In contrast, ML projects limit agility. They are iterative projects too, but they require a more structured process, especially if data acquisition is part of the project.

While most tutorials for machine learning frameworks use prepared data sets and focus on the modelling part, in industry data sets are rarely prepared and cleaned up. Sometimes the data has not even been collected into a usable data set. In almost every project, data preparation (and understanding) is one of the most time-consuming parts.

The first step is to collect the data. This sounds easy, but selecting the data is an important step and should be done carefully. There are many obstacles in this process, as the data generated in a company is as complex as the underlying business processes. The basic rule should be: don't throw any information away, you might need it later for data cleaning. Data validation is another point, and it should be performed with external input. Sometimes the data tells us something that can only be understood by asking experts (software or business).

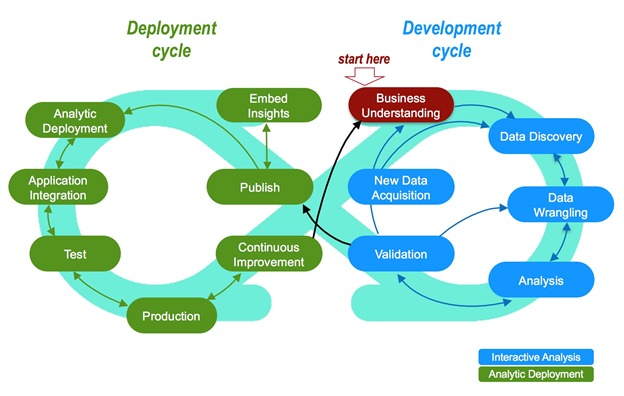

If you asked me what this process could look like, I would refer to ASUM-DM, a process description for machine learning projects from IBM that is inspired by CRISP-DM. For the sake of completeness, here is a visualization; I don't want to go into more detail:

Source: https://www.kdnuggets.com/2017/02/analytics-grease-monkeys.html

Data = Code

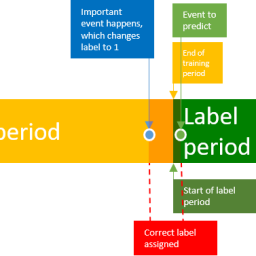

Even more interesting is the fact that the program code is no longer the source of “business logic”. While a lot of code is still necessary to build a product containing an ML model, the rules for decisions lie in the trained model itself. As the model is based on training data, the first implication is that data is the source of business logic. It should be obvious that this data has to be persisted as a snapshot for every model training if the data source is not capable of event sourcing. Usually data sources are databases, files or event streams, so they are dynamic and you cannot go back to an arbitrary point in time. Furthermore, IT systems are coupled, and future changes might change the structure of the data completely. While this has several impacts on machine learning systems in general, it is also fundamental to data acquisition.
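A minimal sketch of such a snapshot, assuming the training rows fit in memory as a list of dictionaries. The function name `snapshot_training_data`, the CSV format and the metadata fields are illustrative choices, not something prescribed by a particular tool. The snapshot file is named after its content hash, so a trained model can store that hash and always be traced back to the exact data it was trained on.

```python
import csv
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def snapshot_training_data(rows, fieldnames, snapshot_dir):
    """Write the rows used for one training run to a hash-named CSV file."""
    snapshot_dir = Path(snapshot_dir)
    snapshot_dir.mkdir(parents=True, exist_ok=True)

    # Serialize to a temporary file first so the content hash can be computed.
    tmp_path = snapshot_dir / "snapshot.tmp"
    with tmp_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)

    digest = hashlib.sha256(tmp_path.read_bytes()).hexdigest()
    data_path = snapshot_dir / f"{digest}.csv"
    tmp_path.replace(data_path)  # identical data maps to the same file name

    # Keep metadata next to the data so a model can reference its snapshot.
    meta = {
        "sha256": digest,
        "rows": len(rows),
        "fields": list(fieldnames),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    data_path.with_suffix(".json").write_text(json.dumps(meta, indent=2))
    return meta


if __name__ == "__main__":
    # Hypothetical sensor readings standing in for a database query result.
    rows = [
        {"sensor_id": 1, "temperature": 21.5},
        {"sensor_id": 2, "temperature": 19.0},
    ]
    with tempfile.TemporaryDirectory() as directory:
        meta = snapshot_training_data(rows, ["sensor_id", "temperature"], directory)
        print(meta["sha256"][:12], meta["rows"])
```

Storing the returned hash together with the trained model is one simple way to make a training run reproducible even after the source database has changed.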

Suppose you are building an ML pipeline in which your data is read directly from the database, preprocessed and used for training. The outcome is a working model. Four weeks later you run the same process and your new model does not work anymore. You can go through the code and perhaps find that it did not handle a changed field. But it is also possible that adjustments have been made that changed the existing data in the data source. For instance, null values have been added, the mean or variance has changed, or a feature has turned into a self-fulfilling prophecy. In this case it is hard to find the reason if you don't have the old data set.
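If the old data set is still available, the kinds of changes mentioned above (added null values, shifted mean or variance) can be detected by comparing simple per-column statistics. The following is only a sketch: the function names and the tolerances `rel_tol` and `null_tol` are assumptions and would need tuning for real data.

```python
import math
import statistics


def column_profile(values):
    """Summarize one numeric column; None marks a missing value."""
    present = [v for v in values if v is not None]
    return {
        "null_fraction": 1 - len(present) / len(values) if values else 0.0,
        "mean": statistics.fmean(present) if present else math.nan,
        "variance": statistics.pvariance(present) if present else math.nan,
    }


def drift_report(old_values, new_values, rel_tol=0.2, null_tol=0.05):
    """Return the statistics that moved by more than the given tolerances."""
    old, new = column_profile(old_values), column_profile(new_values)
    findings = {}
    if abs(new["null_fraction"] - old["null_fraction"]) > null_tol:
        findings["null_fraction"] = (old["null_fraction"], new["null_fraction"])
    for key in ("mean", "variance"):
        # Relative change, guarded against division by zero.
        scale = max(abs(old[key]), 1e-12)
        if abs(new[key] - old[key]) / scale > rel_tol:
            findings[key] = (old[key], new[key])
    return findings


if __name__ == "__main__":
    # Hypothetical column from the snapshot of the last training run ...
    old_column = [10.0, 11.0, 9.0, 10.0]
    # ... and the same column read from the live source four weeks later.
    new_column = [10.0, None, None, 30.0]
    for name, (before, after) in drift_report(old_column, new_column).items():
        print(f"{name}: {before} -> {after}")
```

A report like this does not explain why the data changed, but it narrows the search to specific columns before anyone starts debugging the model code.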

Identify invalid data early

Checking for wrong data is an ongoing process. Almost every real-world data source contains some invalid or highly biased data points. Industrial sensors break and produce wrong data. Text data is riddled with spam from bots, and images are mislabeled. To train a good classifier you need good data, or at least a high percentage of good data. Finding such data is highly domain-dependent and therefore not covered here.
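The rules themselves depend on the domain, but the mechanics of an early check can be sketched generically: each rule is a named predicate, and rows that fail any rule are set aside together with the reasons instead of being silently dropped. The rule names and the temperature limits below are purely hypothetical.

```python
def validate_rows(rows, rules):
    """Split rows into valid ones and rejected ones with the failed rule names."""
    valid, rejected = [], []
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        if failed:
            rejected.append((row, failed))
        else:
            valid.append(row)
    return valid, rejected


# Hypothetical rules for a temperature sensor; real limits come from domain experts.
SENSOR_RULES = {
    "temperature_present": lambda r: r.get("temperature") is not None,
    "temperature_in_range": lambda r: r.get("temperature") is not None
    and -40.0 <= r["temperature"] <= 125.0,
}


if __name__ == "__main__":
    readings = [
        {"sensor_id": 1, "temperature": 21.0},
        {"sensor_id": 2, "temperature": None},   # sensor delivered no value
        {"sensor_id": 3, "temperature": 900.0},  # physically implausible
    ]
    valid, rejected = validate_rows(readings, SENSOR_RULES)
    print(len(valid), "valid rows")
    for row, reasons in rejected:
        print("rejected", row, "because of", reasons)
```

Keeping the rejected rows and their reasons fits the earlier advice not to throw information away: the rejects are often exactly what the domain experts need to see.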

Have a reserve validation dataset

In shallow learning it is common to use k-fold cross validation to estimate overall model quality and fine-tune hyperparameters. Sometimes your data is sparse. In this case it often makes sense to train your model with as much data as possible to get close to real-world data: the model selected by k-fold cross validation is then trained on all data. To prove the model's performance, you should have an extra validation dataset. This validation dataset can be quite small and should consist of samples that must be predicted correctly for the project to succeed (manually selected, valuable entries).

In deep learning it's also a good idea to create an extra validation dataset with enough variance, even if it's more time-consuming. In the end it will pay off, as you don't fall into the trap of optimizing a model that does not solve the problem you want to solve. Think about all the examples where a deep learning classifier achieved high accuracy but failed on the very important samples. Andrew Ng recommends having such a validation data set in his book Machine Learning Yearning.
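The procedure from the paragraph above can be sketched in plain Python. A tiny k-nearest-neighbour classifier on one-dimensional toy data stands in for a real model here; the data, the candidate hyperparameters and all function names are assumptions made for illustration only.

```python
import random
from collections import Counter


def knn_predict(train, x, k):
    """Majority label among the k training points closest to x (1-D features)."""
    nearest = sorted(train, key=lambda item: abs(item[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, test, k):
    """Fraction of test samples that are predicted correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in test) / len(test)


def k_fold_score(data, k_neighbors, folds=5):
    """Mean accuracy over `folds` train/test splits."""
    scores = []
    for i in range(folds):
        test = data[i::folds]  # every folds-th sample forms the held-out fold
        train = [item for j, item in enumerate(data) if j % folds != i]
        scores.append(accuracy(train, test, k_neighbors))
    return sum(scores) / folds


def select_and_check(data, reserve, candidates=(1, 3, 5)):
    # 1) Pick the hyperparameter with k-fold cross validation.
    best_k = max(candidates, key=lambda k: k_fold_score(data, k))
    # 2) The final model uses all available data (k-NN simply stores it).
    final_train = list(data)
    # 3) Every hand-picked reserve sample must be predicted correctly.
    failures = [
        (x, y) for x, y in reserve if knn_predict(final_train, x, best_k) != y
    ]
    return best_k, failures


if __name__ == "__main__":
    # Toy data: class 0 lies in [0.0, 1.9], class 1 in [3.0, 4.9].
    data = [(i * 0.1, 0) for i in range(20)] + [(3 + i * 0.1, 1) for i in range(20)]
    random.Random(0).shuffle(data)
    # Manually selected samples that matter for project success.
    reserve = [(0.45, 0), (4.05, 1)]
    best_k, failures = select_and_check(data, reserve)
    print("selected k:", best_k, "failed reserve samples:", failures)
```

The important design choice is that the reserve samples never take part in cross validation or training, so a failure on them is an honest signal that the selected model misses something the project depends on.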

[…] After weeks of training and optimizing a neural net, at some point it might be ready for production. Most deep learning projects never reach this point, and for the rest it's time to think about frameworks and the technology stack. There are several frameworks that promise easy productionizing of deep neural nets. Some might be a good fit, others are not. This post is not about these paid solutions; it is about how to bring your deep learning model into production without third-party tools. It shows an example with Flask as a RESTful API and a direct integration in Java for batch predictions. This post focuses on deep neural networks; for traditional ML, take a look at this series. […]