This is the last post in my series about machine learning in practice. This post is about productionizing machine learning models. I want to share my experience from several production machine learning systems and show how to make them reliable.

In the last two posts, I showed how to create datasets and how to make sure preprocessing pipelines are consistent. While these steps focus on the reproducibility of ML pipelines, something important is still missing. After a machine learning product has been validated and deployed, its outcome should be monitored continuously to ensure the system works as intended and to detect possible drift in the underlying data. Unlike with other software systems, it is not enough to check availability and performance in terms of response rates and timings; the predictions themselves must be monitored as well.

Three types of models

What to monitor depends directly on the nature of the model. I differentiate between three types:

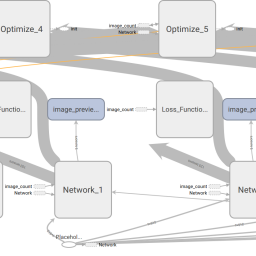

- Manually trained models. These models are completely supervised by a person. In this case, monitoring focuses on prediction performance only. Examples are deep neural networks and other complex or unstable models that cannot be trained without human interaction.

- Automatically retrained models. These models are easier to retrain. They are typically used in scenarios where the training data grows over time, so that model performance improves as more data becomes available. In this case, the training process itself should be monitored as well. Examples are decision trees, SVMs or k-means clustering.

- Online learning models. The last type of model learns continuously, usually updating itself every time it receives feedback on its predictions. Such models use a feedback loop to improve over time and should be re-validated at regular intervals on separate datasets. Examples are reinforcement learning or online classifiers.
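To make the periodic re-validation of online models more concrete, here is a minimal Python sketch. It assumes a toy online perceptron (a hypothetical stand-in for whatever online classifier is actually in use) that learns from each labelled example as feedback arrives, and it re-validates the model every `validate_every` updates on a fixed holdout set that the model never learns from. The names `OnlinePerceptron`, `run_online` and the `min_accuracy` threshold are illustrative assumptions, not a specific library's API.

```python
import random


class OnlinePerceptron:
    """Toy online binary classifier (hypothetical stand-in for a real online model)."""

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        score = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if score > 0 else 0

    def learn(self, x, y):
        # Perceptron update: weights only change when the prediction was wrong.
        error = y - self.predict(x)
        if error != 0:
            self.w = [wi + self.lr * error * xi for wi, xi in zip(self.w, x)]
            self.b += self.lr * error


def accuracy(model, dataset):
    return sum(model.predict(x) == y for x, y in dataset) / len(dataset)


def run_online(model, stream, holdout, validate_every=100, min_accuracy=0.8):
    """Learn from a feedback stream and re-validate on a separate holdout set in intervals."""
    reports = []
    for step, (x, y) in enumerate(stream, start=1):
        model.learn(x, y)  # feedback loop: update after every labelled example
        if step % validate_every == 0:
            acc = accuracy(model, holdout)
            reports.append((step, acc, acc >= min_accuracy))
    return reports


# Synthetic data (assumption): label is 1 when the two features sum to more than 1.
rng = random.Random(0)


def sample():
    x = [rng.random(), rng.random()]
    return x, int(x[0] + x[1] > 1.0)


holdout = [sample() for _ in range(200)]  # separate validation set, never learned from
stream = [sample() for _ in range(1000)]  # simulated stream of feedback
reports = run_online(OnlinePerceptron(n_features=2), stream, holdout)
for step, acc, ok in reports:
    print(step, round(acc, 3), "OK" if ok else "ALERT")
```

The important design choice is keeping the holdout set strictly separate from the stream: an accuracy measured only on data the model has already adapted to can hide a degradation.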

Metrics to track

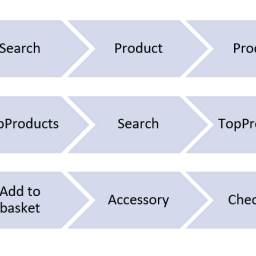

Predictions should be tracked for every model, but how to do this depends on how the model is used. When the model runs in batch processing jobs, tracking predictions is straightforward. For real-time predictions, it may be more practical to sample the predictions or apply moving averages.
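For real-time predictions, one way to combine both ideas is sketched below, assuming each prediction is a single number such as a probability. It uses reservoir sampling (a standard technique, chosen here as one option among several) to keep a bounded, uniformly random sample of all predictions seen so far, plus an exponential moving average to follow the trend. `PredictionMonitor`, `sample_size` and `alpha` are hypothetical names.

```python
import random


class PredictionMonitor:
    """Hypothetical helper: bounded uniform sample plus exponential moving average."""

    def __init__(self, sample_size=1000, alpha=0.01, seed=None):
        self.sample_size = sample_size
        self.alpha = alpha  # weight of the newest prediction in the moving average
        self.sample = []
        self.count = 0
        self.ema = None
        self._rng = random.Random(seed)

    def observe(self, prediction):
        self.count += 1
        # Reservoir sampling (Algorithm R): every prediction seen so far ends up
        # in the sample with the same probability sample_size / count.
        if len(self.sample) < self.sample_size:
            self.sample.append(prediction)
        else:
            j = self._rng.randrange(self.count)
            if j < self.sample_size:
                self.sample[j] = prediction
        # Exponential moving average of the predicted value.
        if self.ema is None:
            self.ema = prediction
        else:
            self.ema = self.alpha * prediction + (1 - self.alpha) * self.ema


monitor = PredictionMonitor(sample_size=100, alpha=0.05, seed=42)
for p in [0.2] * 500 + [0.8] * 500:  # simulated shift in predicted probabilities
    monitor.observe(p)
print(monitor.count, len(monitor.sample), round(monitor.ema, 3))
```

Memory stays constant regardless of traffic, and the moving average reacts to the simulated shift, while the sample can be exported periodically to build histograms.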

Regardless of the type of application, these general metrics apply:

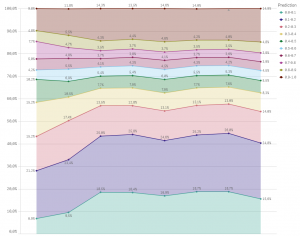

- Class distribution of predictions: Which class/value is predicted how often? Do not only track the mean prediction; track the distribution of predictions (a histogram of probabilities).

- Number of predictions: How many predictions have been made? Make sure to track subcategories, if applicable.

- Specific metrics from preprocessing: These are hard to generalize, but for a text classifier, one example is the number of words per document.
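As a rough sketch of these general metrics in Python, assume each prediction is logged as a dict with the hypothetical keys `label` (predicted class), `proba` (probability of the positive class), `segment` (a subcategory such as the calling client) and `text` (the raw input of a text classifier). The field names and the bucket count are illustrative choices.

```python
from collections import Counter


def histogram(values, bins=10):
    """Count probabilities in `bins` equal-width buckets over [0, 1]."""
    counts = [0] * bins
    for v in values:
        # min(...) puts v == 1.0 into the last bucket instead of out of range
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


def prediction_metrics(records, bins=5):
    records = list(records)
    return {
        "n_predictions": len(records),
        "n_by_segment": Counter(r["segment"] for r in records),
        "class_distribution": Counter(r["label"] for r in records),
        "proba_histogram": histogram([r["proba"] for r in records], bins=bins),
        # preprocessing metric for a text classifier: words per document
        "mean_words_per_doc": sum(len(r["text"].split()) for r in records) / max(len(records), 1),
    }


records = [
    {"label": "spam", "proba": 0.91, "segment": "web", "text": "win a free prize now"},
    {"label": "ham", "proba": 0.12, "segment": "web", "text": "meeting moved to friday"},
    {"label": "ham", "proba": 0.35, "segment": "mobile", "text": "see you soon"},
]
metrics = prediction_metrics(records)
print(metrics)
```

In a batch job these values can be computed once per run; for real-time traffic they would be computed over a sample or a time window.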

When the model is retrained automatically, additionally track:

- One score for evaluation: Make sure you have a single score that describes your model's quality (e.g. accuracy, F1, AUC, MSE).

- In case of classification: Store the ROC curve, the confusion matrix and other relevant metrics.

- Track feature importance, training and testing times

- Store the selected hyperparameters if you use grid search
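The following sketch shows what a report for one automatic training run could contain. The model is deliberately trivial: a hypothetical one-dimensional threshold classifier whose threshold is picked by grid search. This keeps the focus on the report itself, which uses F1 as the single score, stores a confusion matrix, and records training and testing times together with the chosen hyperparameters. Feature importances and the ROC curve depend on the real model and are left out. All function names are assumptions for illustration.

```python
import json
import time
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    counts = Counter(zip(y_true, y_pred))
    # rows = true label, columns = predicted label
    return [[counts[(t, p)] for p in labels] for t in labels]


def f1_score(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def fit_threshold(xs, ys, grid):
    """Hypothetical model: grid search for the threshold with the best training F1."""
    best = max(grid, key=lambda th: f1_score(ys, [int(x >= th) for x in xs]))
    return {"threshold": best}


def train_and_report(train, test, grid):
    xs_train, ys_train = train
    xs_test, ys_test = test

    t0 = time.perf_counter()
    params = fit_threshold(xs_train, ys_train, grid)
    train_seconds = time.perf_counter() - t0

    t0 = time.perf_counter()
    preds = [int(x >= params["threshold"]) for x in xs_test]
    test_seconds = time.perf_counter() - t0

    return {
        "score_f1": f1_score(ys_test, preds),  # the single headline score
        "confusion_matrix": confusion_matrix(ys_test, preds, labels=[0, 1]),
        "hyperparameters": params,  # chosen by grid search
        "train_seconds": train_seconds,
        "test_seconds": test_seconds,
    }


train_data = ([0.1, 0.3, 0.45, 0.6, 0.8, 0.9], [0, 0, 0, 1, 1, 1])
test_data = ([0.2, 0.55, 0.65, 0.4], [0, 0, 1, 1])
report = train_and_report(train_data, test_data, grid=[0.2, 0.4, 0.5, 0.7])
print(json.dumps(report, indent=2))
```

Because the report is plain JSON, it can be stored next to the model artifact and its numeric fields pushed to the monitoring system after every retraining.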

A time series database such as Prometheus is a good place to store this data, and a dashboard in a tool like Grafana or Kibana can visualize these metrics.
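Prometheus collects metrics by scraping an HTTP endpoint that serves them in its text exposition format. In practice the official Python client library (`prometheus_client`) generates this format for you. The stdlib-only sketch below renders it by hand simply to show what a prediction counter and a probability histogram look like there. The metric names, labels and bucket bounds are assumptions.

```python
def render_counter(name, help_text, counts_by_label, label):
    """Render per-label counts as a Prometheus counter."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for value, count in sorted(counts_by_label.items()):
        lines.append(f'{name}{{{label}="{value}"}} {count}')
    return "\n".join(lines)


def render_histogram(name, help_text, values, buckets):
    """Render observed values as a Prometheus histogram."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} histogram"]
    for le in buckets:
        # Buckets are cumulative: number of observations <= the upper bound.
        count = sum(v <= le for v in values)
        lines.append(f'{name}_bucket{{le="{le}"}} {count}')
    lines.append(f'{name}_bucket{{le="+Inf"}} {len(values)}')
    lines.append(f"{name}_sum {sum(values)}")
    lines.append(f"{name}_count {len(values)}")
    return "\n".join(lines)


probas = [0.12, 0.35, 0.91]
text = (
    render_counter("model_predictions_total", "Number of predictions made.",
                   {"ham": 2, "spam": 1}, label="label")
    + "\n"
    + render_histogram("model_prediction_probability",
                       "Predicted probability of the positive class.",
                       probas, buckets=[0.25, 0.5, 0.75, 1.0])
    + "\n"
)
print(text)
```

Exporting the full histogram rather than only a mean is what later allows a Grafana panel to show shifts in the prediction distribution over time.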