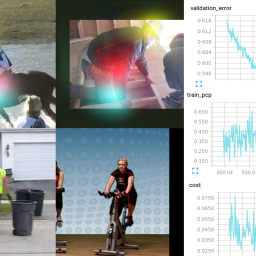

This post is about how to snapshot your model based on custom validation metrics.

First we define the custom metric, as shown here. In this case we use the AUC score:

    import tensorflow as tf
    from sklearn.metrics import roc_auc_score

    def auroc(y_true, y_pred):
        return tf.py_func(roc_auc_score, (y_true, y_pred), tf.double)
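To make it clearer what this metric reports, here is a minimal pure-Python sketch of the AUC as the fraction of (positive, negative) pairs that the scores rank correctly. The name pairwise_auc is made up for illustration, and this is not how scikit-learn computes the score internally. It is only meant to show what the number means.

```python
def pairwise_auc(y_true, y_score):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        # With only one class present there are no pairs to compare
        raise ValueError("AUC needs at least one positive and one negative label")
    # A correctly ordered pair counts 1, a tie counts 0.5, a wrong order counts 0
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(pairwise_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

One practical consequence: the AUC is undefined when a batch contains only one class, so it is worth keeping the batches large enough to contain both.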

Now we compile the model:

    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy', auroc])

Now we use the Keras ModelCheckpoint callback to save only the best model to /tmp/model.h5. monitor tells Keras which metric to track, mode='max' tells Keras to keep the model with the highest score, and period sets how many epochs pass between checkpoints. Note that metrics computed on the validation data are prefixed with 'val_', so our auroc metric is logged as val_auroc.

    from keras.callbacks import ModelCheckpoint

    checkpoint = ModelCheckpoint(filepath='/tmp/model.h5', save_best_only=True,
                                 monitor='val_auroc', mode='max', period=5, verbose=1)

    model.fit(x_train, y_train, validation_data=(x_test, y_test),
              batch_size=128, epochs=20, verbose=1, callbacks=[checkpoint])
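The interplay between save_best_only, mode and period is easy to get wrong, so here is a small framework-free sketch of the decision logic as I understand it. The function epochs_that_save and the example scores are made up for illustration. This is a simplified model of the callback's behavior, not Keras code.

```python
def epochs_that_save(scores, period=1, mode="max"):
    """Return the 1-based epochs at which a best-only checkpoint would be written."""
    if mode == "max":
        is_better = lambda new, best: new > best
    else:
        is_better = lambda new, best: new < best

    best = None
    epochs_since_check = 0
    saved = []
    for epoch, score in enumerate(scores, start=1):
        epochs_since_check += 1
        if epochs_since_check < period:
            continue  # not a checkpoint epoch, this score is never compared
        epochs_since_check = 0
        if best is None or is_better(score, best):
            best = score
            saved.append(epoch)
    return saved


# Hypothetical validation AUC values for ten epochs
val_auroc = [0.70, 0.90, 0.72, 0.71, 0.75, 0.60, 0.80, 0.74, 0.73, 0.74]
print(epochs_that_save(val_auroc, period=1))  # [1, 2]
print(epochs_that_save(val_auroc, period=5))  # [5]
```

In this toy run the best score occurs at epoch 2, but with period=5 only epochs 5 and 10 are considered, so the peak is never saved. A larger period saves disk writes at the cost of possibly missing the best epoch.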

If you want to validate on a different dataset or do some more advanced stuff, you can always use a custom callback:

    from keras.callbacks import Callback

    class MetricsCallback(Callback):
        def on_epoch_end(self, epoch, logs=None):
            if epoch % 10 == 0:
                x_test = self.validation_data[0]
                y_test = self.validation_data[1]
                predictions = self.model.predict(x_test)
                # do whatever you like
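To validate on a different dataset, one option is to hand that data to the callback yourself instead of reading self.validation_data. The sketch below does this. HoldoutMetricCallback, its arguments and the scores list are hypothetical names, and metric_fn can be any function taking (y_true, y_pred), for example roc_auc_score. The try/except fallback is only there so the sketch also runs where Keras is not installed.

```python
try:
    from keras.callbacks import Callback
except ImportError:
    Callback = object  # fallback so the sketch runs without Keras installed


class HoldoutMetricCallback(Callback):
    """Scores the model on an extra dataset every `every` epochs (hypothetical helper)."""

    def __init__(self, x_holdout, y_holdout, metric_fn, every=10):
        super().__init__()
        self.x_holdout = x_holdout
        self.y_holdout = y_holdout
        self.metric_fn = metric_fn
        self.every = every
        self.scores = []  # list of (epoch, score) tuples

    def on_epoch_end(self, epoch, logs=None):
        # Keras passes a 0-based epoch index, so this fires on epochs 0, every, 2*every, ...
        if epoch % self.every != 0:
            return
        predictions = self.model.predict(self.x_holdout)
        score = float(self.metric_fn(self.y_holdout, predictions))
        self.scores.append((epoch, score))
```

It would be passed to model.fit through callbacks=[...] just like the checkpoint above, and the collected scores can be inspected after training.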
