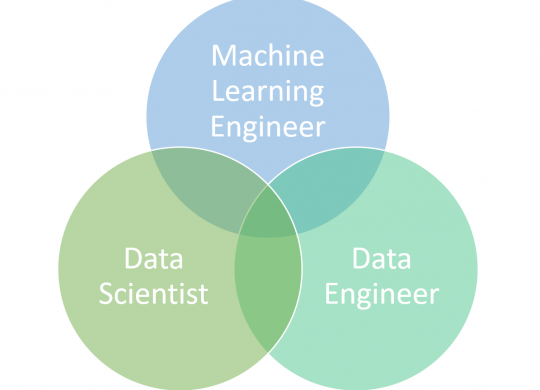

In recent years a new job role has become very popular (at least, I have noticed it in job postings and in the media): the machine learning engineer. But what is the difference between this new job role and data engineering or…

Big Data

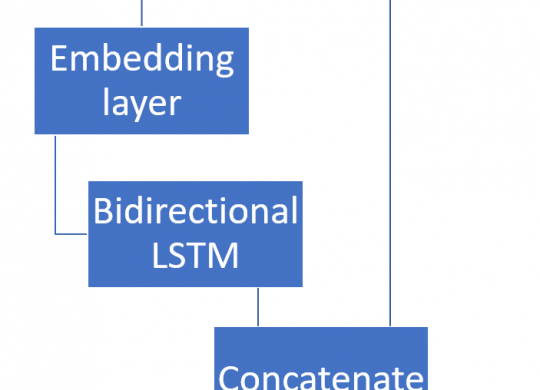

In this post I will show how to combine features from natural language processing with traditional features (metadata) in a single Keras model (end-to-end learning). The solution is a multi-input model. Problem definition: Scientific data sets are…

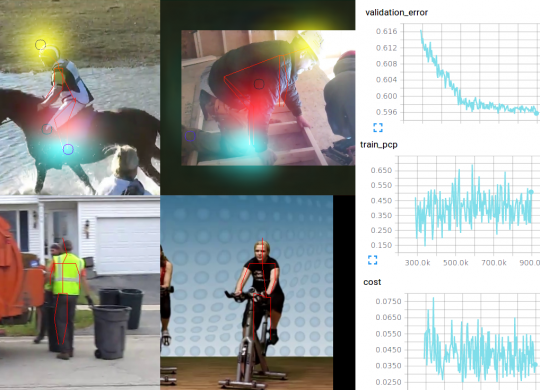

Over the last few months I worked on a deep learning project. The goal was to dig into TensorFlow and deep learning in general. For this purpose I re-implemented a paper from January 2016 called Convolutional Pose Machines, which uses deep…

When running Spark 1.6 on YARN clusters, I ran into a problem: YARN preempted Spark containers and the Spark job then failed. This happened only occasionally, when YARN used a fair scheduler and other queues with a higher priority submitted…

After getting good results with the Random Forest algorithm in the last post, we will now take a look at feed-forward networks, which are a type of artificial neural network. Artificial neural networks consist of many artificial neurons, which are based on the…

In the previous post I showed how to use the Support Vector Machine in Spark and apply PCA to the features. In this post I will show how to use decision trees on the Titanic data and why it's better…

In my previous post I showed how to increase the parallelism of Spark processing by increasing the number of executors on the cluster. In this post I will try to show how to distribute the data in such a way that the cluster…

This is the third part on Kaggle's Machine Learning from Disaster challenge, in which I show how you can use Apache Spark for model-based prediction (supervised learning). This post is about support vector machines. The Support Vector Machine (SVM) is…

Naive Bayes is a probabilistic classifier that assigns a feature vector to a class. It makes the assumption, probably wrong in practice, that the features are statistically independent of each other. Nevertheless, Apache Spark has implemented Naive Bayes and I…

In Apache Spark, the key to performance is parallelism. The first step towards parallelism is getting the partition count to a good level, as the partition is the atomic unit of each job. Reaching a good level of…